Audio Plugins in JavaScript

With Elementary Audio, you can now write audio plugins in JavaScript, in real time, inside a DAW (Digital Audio Workstation) using the same web technology that you've come to know and love. The Elementary Plugin Dev Kit is now in private beta; visit the website to sign up for the waitlist and be notified when it's ready.

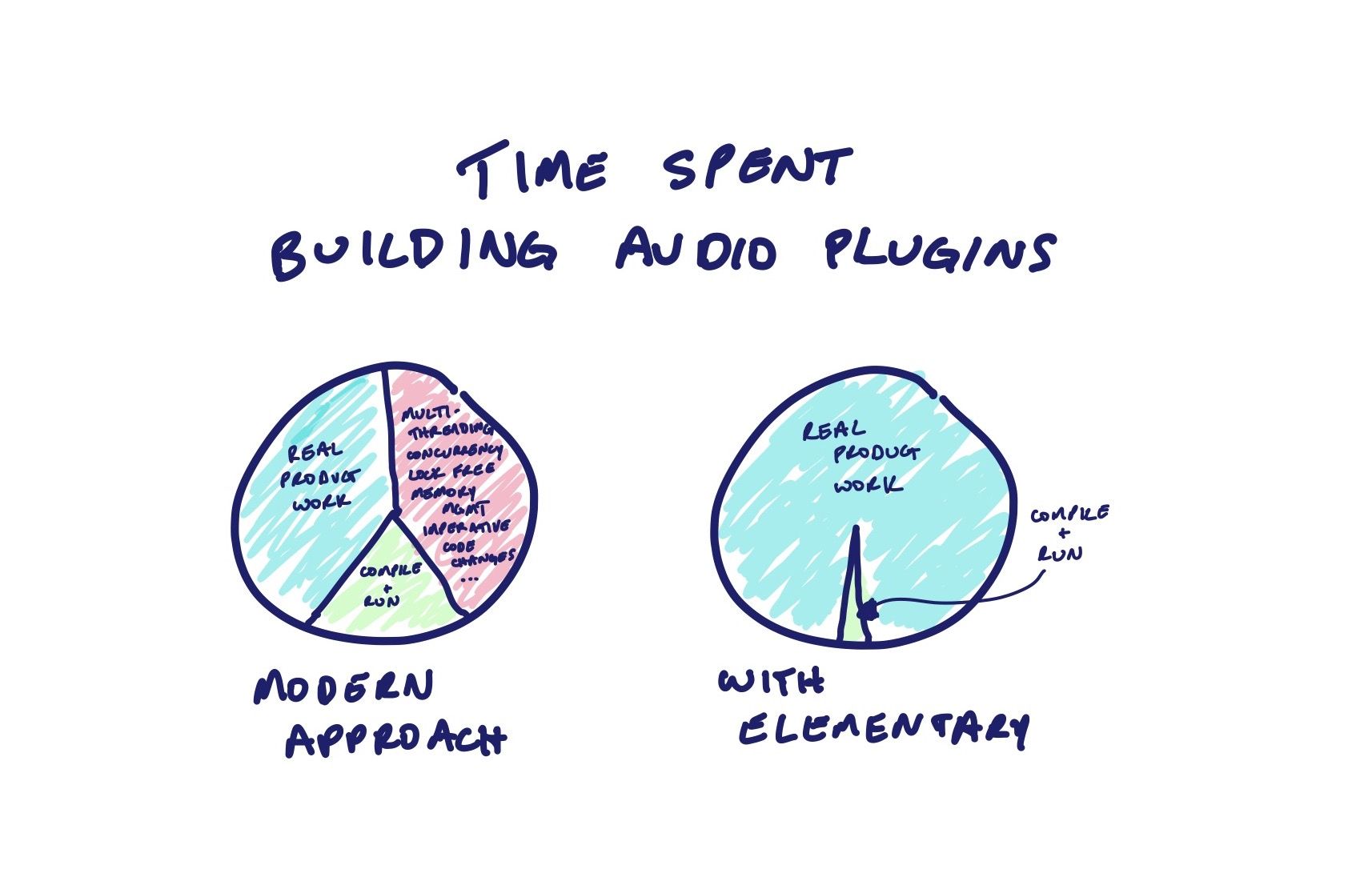

I've shared before some of the overarching goals of Elementary as a toolset and platform, but with this announcement I wanted to revisit some of those goals in a more concrete sense. I briefly asked around a group of audio plugin developers what they found to be the most difficult parts of delivering a plugin, or what parts might be the most time consuming. The answer was a resounding refrain: iterating on products is slow, the cost of changing the code at each step is high, and the way that we communicate state between the processor and the interface is just difficult.

In this article, I want to visit each of those sentiments in detail and explain how Elementary aims to address them, within the context of building and shipping an audio plugin. I'm confident that Elementary can eliminate, or at least mitigate, huge pieces of complexity and pain points along the path to delivering an audio plugin. In doing so, I hope to invite new developers into this space, and refocus on the fun, creative process in building software like this.

Finally, I presented the same discussion at a recent meetup on The Audio Programmer with a brief code walkthrough and demonstration at each point, if you prefer a more visual introduction.

Iterating is Slow

One of the most common refrains that I've heard in discussing the difficulties of writing audio plugins is that iterating on user interfaces is slow. On the surface, I think that's undeniable: there's a lot to manage in any user interface of non-trivial scale, and on top of that, development frequently has to react to iterations at the design and UX level. But this is a particularly difficult situation when the cost of iteration at each step is high, which I would argue is exactly the case in the conventional approach to writing audio plugins.

To start, the edit-compile-run loop in C/C++ is brutally slow. It seems to me that whenever there is actually a compile step in this feedback loop, we need a ton of additional tooling to make the compile step's contribution to this loop invisible. I haven't yet seen that tooling in the audio plugin space. Besides that, minimizing the compile step's contribution to the feedback loop often drags in additional baggage: we start spending time making sure that the way we write our C++ allows for a quick compile step (oh, the number of PR discussions I've seen debating whether or not to introduce template metaprogramming to solve a particular problem), or that our repository is structured in such a way that we can compile our user interface without compiling the signal processing pieces. What's more, when writing a plugin, we often want our "run" step to include opening up a DAW from a template project which will load our plugin. Add this up and we can already justify the statement that iterating on user interfaces is slow.

But it gets worse. Suppose we've written a first pass at a user interface, with all of the best C/C++ object oriented practices, for a multi-effect unit which includes a reverb, and that reverb includes a filter embedded in its signal flow. Our interface includes a panel with knobs which reflects the reverb stage, and some of those knobs correspond to the embedded filter coefficients. Then, we get a product design request: we're going to move the filter to a different stage of the signal chain, and therefore the knobs which control the filter should no longer be seated in the reverb panel (that would be unintuitive); we'll move them to a different part of the interface.

At a minimum, a change like this typically involves removing some lines of code which read something like addAndMakeVisible(knob1), which had established our component hierarchy, and replacing them with new addAndMakeVisible lines elsewhere. Then, because we have an imperative layout procedure, we'll have to update our reverb panel to remove those lines of code which read knob1.setBounds(leftEdge, topEdge, knob1Width, knob1Height), and accordingly rewrite the bounds for all of the other components in our reverb unit, which now need to be nudged around to fill the space vacated by these filter knobs. And of course, we repeat a similar process in the panel which makes a new home for the filter knobs, setting each knob's bounds accordingly and updating the bounds for all of the knobs' new neighbors to make sure there's room for them. So far, we're detailing what I would call pure overhead due simply to imperative programming techniques.

Of course, we're not done yet. This is C/C++, so we need to ensure that all of our objects are created and owned in a sensible place, and that they outlive any other objects that may carry a reference back to them. In version one, perhaps our reverb panel, which we had neatly prepared as a class of its own, constructed and maintained ownership of the knob components that we were using for the filter. Now that we're moving those knobs, should the reverb panel still be the one carrying this responsibility? Perhaps it should be the new parent class that constructs and owns these knobs. Here lives an extra set of imperative code changes. But we had originally planned for this reverb panel to be modular and reusable, so that a potential future product could reuse the same reverb and the same reverb panel in its user interface: should we try to leave the door open for that eventual reality? Maybe our reverb panel should own the filter knobs? Or maybe it should at least construct them and maintain ownership in the case that we default construct our reverb panel. And maybe we'll add a second constructor which takes references to knob components that live elsewhere, so that we know when it's our responsibility and when it's not. Every one of these questions and each of these options represents unnecessary overhead.

Unfortunately these examples aren't hypothetical. I'm sure you can find teams at big companies who've built proprietary, in-house solutions to problems like these who could refute this whole example. Or maybe you can tell me that if I was simply a 10x C/C++ software developer I wouldn't hit problems like these in the first place. But the reality of my experience is that there are so many developers in the audio plugin space who write their software just like this (myself included, at first), and incur the cost of this overhead repeatedly, project after project.

In the frontend web development landscape, problems like this vanish. The entire category of lifetime and ownership questions disappears into the garbage collector. Interface hierarchy is expressed declaratively (HTML, or React, for example), and a change to the structure is often as simple as moving a single line of code up or down within that structure. Layout is expressed declaratively in terms of a set of rules and constraints: moving a child component to a new parent often automatically resolves itself in terms of layout, and when it doesn't, describing a new set of rules for that scenario can regularly be a tiny code change. Moreover, the edit-compile-run feedback loop is blazingly fast (due in part to excellent tooling and in part to using interpreted languages), often updating my screen before I can even move my focus away from my editor.
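To make the "moving a single line" claim concrete, here is a minimal sketch of a declarative interface description for the reverb and filter example. The `h` helper, the element types, and the `layout` property are all hypothetical stand-ins invented for illustration; this is not React's API or Elementary's, just plain data in the same spirit.

```javascript
// Hypothetical helper (an assumption for this sketch, not a React or
// Elementary API): describes a UI element as plain data.
function h(type, props = {}, ...children) {
  return { type, props, children };
}

// The whole interface is a function of state. Nothing here is constructed,
// owned, or positioned by hand.
function Interface(state) {
  const filterKnobs = [
    h('knob', { label: 'Cutoff', value: state.cutoff }),
    h('knob', { label: 'Resonance', value: state.resonance }),
  ];

  return h('plugin', { layout: 'column' },
    // The filter knobs now live in their own panel.
    h('panel', { title: 'Filter', layout: 'row' }, ...filterKnobs),
    h('panel', { title: 'Reverb', layout: 'row' },
      h('knob', { label: 'Decay', value: state.decay }),
      h('knob', { label: 'Mix', value: state.mix }),
      // Version one had `...filterKnobs` on this line; moving the knobs
      // meant moving that one line to the panel above.
    ),
  );
}

const ui = Interface({ cutoff: 800, resonance: 0.7, decay: 2.5, mix: 0.3 });
console.log(JSON.stringify(ui, null, 2));
```

There are no bounds to recompute and no ownership to reassign in this sketch. A layout engine (assumed here, not shown) would resolve the positions of the remaining knobs from the `row` and `column` rules.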

It's within this landscape that I've seen the best answer (so far) to the problem that iterating on a user interface is slow. I find the frontend web developer experience amazing; I've tried before to bring some of these solutions into the audio plugin space, and I intend to keep trying. For that reason, the Elementary Plugin Dev Kit hosts a complete web environment within which you can develop your plugins. In the presentation I mentioned above, I demonstrated using create-react-app itself for my plugin UIs, complete with hot reloading (inside the plugin, inside the DAW), and the comforts of npm and the frontend web ecosystem. This is not a new approach for developing user interfaces in a plugin (it powers at least one of the most popular plugins on the stage today), but paired with Elementary, as we'll see in the following sections, I think this approach takes another huge step forward.

Iterating on DSP Can Be Better Too

We've now seen through the first example that iterating on an audio plugin user interface has a high cost. Here I make the case that we see the exact same issues in iterating on the DSP (Digital Signal Processing) within our plugins, stemming from some of the exact same causes.

I don't want to belabor the same points that I made above, so I'll only briefly consider our prior example in the context of writing the DSP: we want to move a filter from within our reverb processing unit to outside our reverb, somewhere "higher up" in the signal flow hierarchy. Owing again to our C/C++ object oriented best practices and the imperative programming model, we have several (or many) lines of code that we need to consider to take apart and wire up our processors again. This is the same exercise, complicated by the same questions of lifetime, ownership, modularity, and reuse.

But in this case, things get complicated further still. When we consider these complications of writing DSP we have to do so in the context of running on a realtime rendering thread, on which we have the golden rules of avoiding memory allocations, avoiding locks, and generally avoiding anything which does not have a strictly deterministic execution time. Except, not all of this should actually be the responsibility of the realtime thread; constructing new class instances should likely be done on the main thread, addressing lifetime and ownership there as well. Then, of course, there are some DSP operations that we actually do want to run off the realtime thread, such as FFTs or biquad filter coefficient calculations, propagating their results back to the realtime thread at an appropriate time. All of these details represent independent pieces of baggage (software programming problems, not problems strictly related to the audio process we're trying to describe) that we tend to carry into each and every one of our audio plugin development projects, raising the iteration time, thereby raising the cost of iteration (and the financial cost of the project), and slowing us down.

Elementary approaches this problem set with the same model that revolutionized frontend web development: a functional, declarative programming model. This is something I've written at length about already, and a point on which I intend to further elaborate in an upcoming presentation at ADC 2021, so I won't get into it much here. The short version is that we can express these same ideas about structure and hierarchy in signal flow through a declarative model, and defer all of the cognitive overhead of lifetime, ownership, and the underlying imperative procedures to the Elementary engine.
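As a rough illustration of what "declarative signal flow" can look like, the sketch below describes a signal chain as nested plain data and then moves a filter to a different stage. Every name in it (`node`, `input`, `lowpass`, `drive`, `reverb`, `describe`) is a hypothetical stand-in invented for this example, not Elementary's actual API, and no audio is processed; the point is only the shape of the code change.

```javascript
// Hypothetical stand-in for a signal node (an assumption for this sketch):
// plain data, with no manual allocation or wiring.
const node = (type, props, ...inputs) => ({ type, props, inputs });

const input = (channel) => node('in', { channel });
const lowpass = (cutoff, x) => node('lowpass', { cutoff }, x);
const drive = (amount, x) => node('drive', { amount }, x);
const reverb = (decay, x) => node('reverb', { decay }, x);

// Version one: the filter sits directly in front of the reverb.
const chainV1 = (s) =>
  reverb(s.decay, lowpass(s.cutoff, drive(s.amount, input(0))));

// Version two: the filter moves to the front of the whole chain.
// The change is a reordering of the composition and nothing else.
const chainV2 = (s) =>
  reverb(s.decay, drive(s.amount, lowpass(s.cutoff, input(0))));

// Walk a single-input chain from source to output for display.
const describe = (n) =>
  n.inputs.length ? `${describe(n.inputs[0])} -> ${n.type}` : n.type;

const state = { decay: 2.5, cutoff: 800, amount: 0.4 };
console.log(describe(chainV1(state))); // in -> drive -> lowpass -> reverb
console.log(describe(chainV2(state))); // in -> lowpass -> drive -> reverb
```

Under this model, whatever consumes the description is responsible for constructing processors, managing their lifetimes, and swapping them in safely; the description itself never touches those concerns.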

Moreover, we can do this using the same language, the same model, and from the same code that we use to approach building our user interface. We can do this using even the same tooling, which means that the Elementary Plugin Dev Kit will not only let you iterate on your user interface with hot reloading inside your DAW, but will let you iterate on your DSP with hot reloading inside your DAW at the same time. Welcome to 2021, audio software.

Shared State is Hard

Shared mutable state is the root of all evil.

I forget where I first heard that quote. After a quick internet search, it seems to have proliferated so widely that I'm not sure I'll be able to find the original source. I remember it well from the days of Pete Hunt giving the first few major talks on React.js around 2013, so I'll go ahead and attribute it to him. Either way, it's a statement that I firmly agree with to this day.

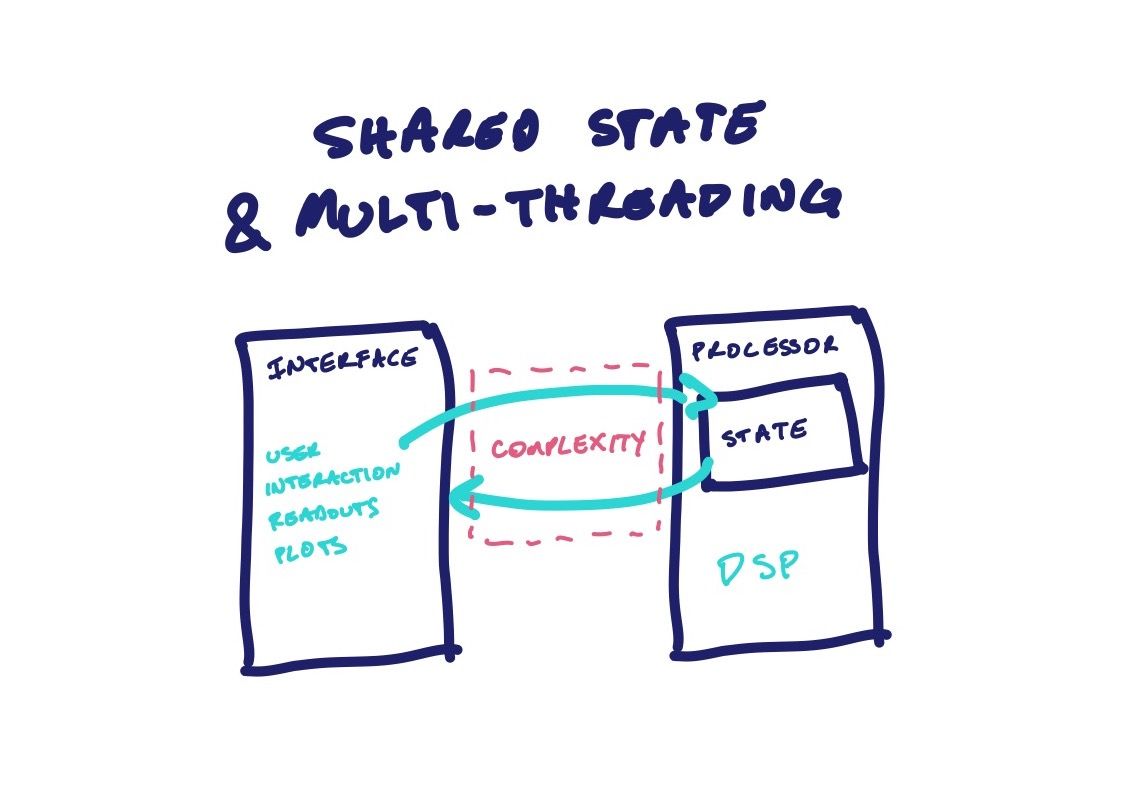

Let's imagine that we could virtually eliminate all of the problems complicating iteration speed from the prior examples; that is, that we had well-known and widely used solutions within the conventional C/C++ model for building audio plugins. Even then, we've only clipped the tip of the iceberg of complexity, because underneath those problems lies a broader problem of how to share state within an audio plugin.

Classically, we structure our audio plugin software such that the processor owns our state, whatever that might be (often including at least the plugin parameter state), then any other piece of the software has to be careful with how it tries to interact with that state. Typically, sharing that state involves multi-threaded concurrency that must be lock-free to keep the realtime thread in good standing. For example, the user interface might try to write a new parameter value into the processor's state, but has to be careful to make those writes atomic. Our processor might update state values (such as peak meter levels of some internal signal) and leave the interface to query the new state at a later time, which again should probably be an atomic read. And this works out nicely enough when the state in question can trivially fit into atomic structures (like std::atomic<T>), but what about more difficult state? How do I represent and communicate, for example, that I want to completely remove a certain part of the signal flow? And how would I even do that on the realtime thread without invoking the complexities of memory management? Even some of the most experienced developers I know at big brand name companies have different ideas on how to approach this, sometimes going as far as completely duplicating the state between the UI and the DSP and trying to find some generic data replication scheme to keep the two in sync, freeing (or at least, trying to) the user interface from the problems of multi-threaded concurrency.
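For readers who haven't written this kind of code, here is a small sketch of the "trivially atomic" case, transposed into JavaScript with `SharedArrayBuffer` and `Atomics` purely for illustration (a conventional plugin would do the equivalent in C++ with `std::atomic`). The slot layout and the `storeFloat` and `loadFloat` helpers are assumptions made up for this example, and everything runs on one thread here; in real use each thread would hold its own scratch views.

```javascript
// One shared 32-bit slot per value. Atomics only operates on integer views,
// so floats are stored as their raw bit patterns.
const shared = new SharedArrayBuffer(4 * 2);
const slots = new Int32Array(shared);
const CUTOFF = 0; // parameter written by the UI, read by the processor
const PEAK = 1;   // meter value written by the processor, read by the UI

// Scratch views used to reinterpret a float's bits as an int and back.
const scratch = new ArrayBuffer(4);
const asFloat = new Float32Array(scratch);
const asInt = new Int32Array(scratch);

function storeFloat(index, value) {
  asFloat[0] = value;
  Atomics.store(slots, index, asInt[0]);
}

function loadFloat(index) {
  asInt[0] = Atomics.load(slots, index);
  return asFloat[0];
}

storeFloat(CUTOFF, 440); // "UI" side writes a parameter value
storeFloat(PEAK, 0.5);   // "processor" side publishes a meter level
console.log(loadFloat(CUTOFF), loadFloat(PEAK)); // 440 0.5
```

This works because each value fits in a single atomic slot. It offers nothing for structural changes such as removing part of the signal flow, which is where the difficulty described above begins.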

Shared mutable state is a notoriously difficult problem space (as is data replication), and it's only made more complicated in the world of audio programming via the fact that we try very hard to avoid whole categories of thread synchronization utilities in an attempt to keep the realtime thread free of non-deterministic actions. This is exactly why I place so much value in the way that Elementary allows you to treat your state: exactly the same as you treat your UI state. With Elementary, you can express your state as a distinct, plain old data structure, and write your application strictly as a function of that state. We've already seen what this can mean for radically simplifying application development in the way that React.js took over the frontend web development world. Now we have that same model for describing our DSP. There is no additional complication to the idea of state sharing here because there's effectively no second entity that you need to worry about: Elementary will take your signal flow description and manage all of the multi-threaded concurrency involved in realizing your rendering process.
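A minimal sketch of "the application as a function of state" might look like the following. The function names and the shapes of the returned descriptions are hypothetical, chosen only for illustration, and the step where an engine turns the signal description into running audio is assumed rather than shown.

```javascript
// A single plain object holds all application state.
const state = { cutoff: 800, bypassFilter: false };

// Describe the signal flow for a given state (hypothetical node shapes).
function describeSignal(s) {
  const input = { type: 'in', props: { channel: 0 }, inputs: [] };
  // Removing part of the signal flow is just returning a different description.
  if (s.bypassFilter) return input;
  return { type: 'lowpass', props: { cutoff: s.cutoff }, inputs: [input] };
}

// Describe the interface for the same state (hypothetical element shapes).
function describeInterface(s) {
  const knob = { type: 'knob', props: { label: 'Cutoff', value: s.cutoff } };
  return { type: 'panel', children: s.bypassFilter ? [] : [knob] };
}

// Both descriptions derive from one object, so they cannot drift out of sync.
function render(s) {
  return { signal: describeSignal(s), ui: describeInterface(s) };
}

const before = render(state);
const after = render({ ...state, bypassFilter: true });
console.log(before.signal.type, before.ui.children.length); // lowpass 1
console.log(after.signal.type, after.ui.children.length);   // in 0
```

The question from earlier, how to communicate that a part of the signal flow should be removed, reduces in this sketch to changing one field and rendering again.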

Bringing the Event Loop to DSP

In the prior section, we focused on the fact that it can be the same state (literally the same JavaScript object) which decides the current look of your application and the behavior of the DSP: there is no sharing, no multi-threaded concurrency, no race conditions, just state. Our discussion there primarily concerns the direction of data flow from application state down to the rendered user interface and the actual signal processing chain, but what about the reverse direction? There are plenty of instances where we want to retrieve state from the realtime thread: meters, oscilloscopes, FFT analyses. These bring back in the same set of concurrency complexities: circular buffers, atomics, lock-free queues, etc.

Here Elementary leans on some of the patterns long established in the JavaScript ecosystem, using an event loop to abstract over the business of the underlying thread behavior so that when some such thread has some information to share (say, a new value for a peak meter reporting on some gain signal in our DSP chain), we can propagate it to your code through an event dispatching architecture. That means that retrieving these values is as simple as declaring your tap points:

    core.render(
      // Tap the left and right input channels with one meter node each.
      el.meter({key: 'leftChannel'}, el.in({channel: 0})),
      el.meter({key: 'rightChannel'}, el.in({channel: 1})),
    );

Then installing some simple event listeners for handling incoming value updates:

    core.on('meter:leftChannel', function(value) {
      // Do whatever you need! Draw to a canvas, update your React
      // component state. It's all just JavaScript from here.
    });

Not only is this pattern established, it's proven for high performance applications via Node.js and libuv, and again it allows you to focus on the task at hand (e.g. getting data and drawing peak level meters) without pulling along all of the baggage of multi-threaded concurrency and lock-free programming. And after such a focus on iteration speed, it feels apt to point out that if you then wanted to change the tap point to monitor a different part of your audio signal chain, it's simply a matter of moving the el.meter() function within your function composition.
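For a sense of what the event dispatching pattern described above involves, here is a deliberately simplified, single-threaded sketch. The `on`, `publish`, and `drain` functions and the plain array used as a queue are assumptions for illustration only; they say nothing about how Elementary actually moves data off the realtime thread.

```javascript
// Registered listeners, keyed by event name.
const listeners = new Map();

function on(event, fn) {
  if (!listeners.has(event)) listeners.set(event, []);
  listeners.get(event).push(fn);
}

// Stand-in for the queue that a realtime thread would write into.
// A real engine would use a lock-free structure here (assumption).
const pending = [];

function publish(event, value) {
  pending.push({ event, value });
}

// One turn of the "event loop": deliver everything queued so far.
// A host would schedule this periodically; it is called directly below
// so that the example stays deterministic.
function drain() {
  while (pending.length > 0) {
    const { event, value } = pending.shift();
    for (const fn of listeners.get(event) || []) fn(value);
  }
}

const seen = [];
on('meter:leftChannel', (value) => seen.push(value));

publish('meter:leftChannel', 0.25);
publish('meter:rightChannel', 0.5); // no listener registered; dropped
publish('meter:leftChannel', 0.75);
drain();

console.log(seen); // [ 0.25, 0.75 ]
```

The listener never sees the queue, only values, which is the separation that keeps concurrency details out of application code.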

Conclusion

By now I hope it's clear that my goal with Elementary is not just to enable authoring audio plugins or apps with JavaScript, but to simultaneously eliminate or mitigate entire categories of software programming problems that complicate the audio development process. That we can write in JavaScript is icing on the cake: really, insanely delicious icing, because it means that we can integrate the incredible tools and technologies coming out of the frontend web space into this new domain.

Through this goal, I think we can drastically improve the way that we write audio software today; developers will spend a larger portion of their time solving the problems that matter, and projects will move faster.

The Elementary Plugin Dev Kit is in private beta and will be widely available within the coming months. Visit the website, sign up for the waitlist, and you'll be the first to know.